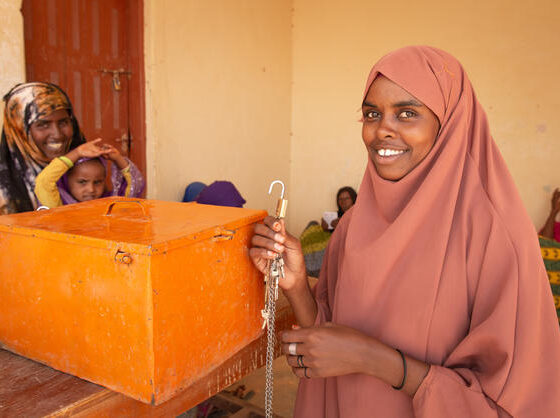

Women’s Empowerment

Women’s empowerment can be defined as promoting women’s sense of self-worth, their ability to make their own choices, and their right to influence social change for themselves and others.

It is closely aligned with female empowerment – a fundamental human right that’s also key to achieving a more peaceful, prosperous world.

28 February, 2021

Women’s empowerment

Women’s empowerment can be defined as promoting women’s sense of self-worth, their ability to make their own choices, and their right to influence social change for themselves and others.

Donate

Small charities can have a big impact. We can’t help everyone, but everyone can help someone.